Campus Hiring in India 2025: How AI Can Help You Pick the Right Freshers Faster


Introduction

The buzz on LinkedIn and Twitter is palpable: campus hiring season in India is becoming a logistical nightmare. For every startup founder or hiring manager in Bangalore or Hyderabad, the challenge is the same: how do you sift through thousands of applications from fresh graduates to find the few who genuinely fit your team's needs, without drowning in resumes or letting unconscious bias creep in? The volume is staggering, and the pressure to move quickly means good candidates can easily slip through the cracks. This isn't just about filling entry-level positions; it's about building the foundation of your company's future.

In 2025, the answer increasingly lies not in hiring more recruiters but in making smart use of artificial intelligence (AI). The conversation, however, has matured beyond simple automation. It is now about building AI systems that augment human decision-making, mitigate historical biases, and explain their recommendations well enough to be trusted. This article explores how modern AI, grounded in recent research, can turn campus hiring from a frantic race into a strategic, data-driven process. We will look at the technical frameworks that make this possible, from debiasing interventions to systems that support human critical thinking, and outline a practical approach to implementation.

The Core Challenge: Volume, Velocity, and the Bias Blind Spot

The fundamental problem with campus hiring is its scale. A single drive at a major engineering college can generate thousands of applications. Traditional manual screening is slow, inconsistent, and notoriously vulnerable to cognitive biases. Recruiters, often pressed for time, may unconsciously favour candidates from familiar colleges, with certain keywords on their resumes, or with backgrounds similar to their own. This "bias blind spot" limits diversity and leads recruiters to overlook high-potential candidates from non-traditional backgrounds. Automated resume screening tools have existed for years, but early versions often perpetuated these biases by learning from historical, biased hiring data. If a company has historically hired mostly from a few top-tier institutes, an AI trained on that data will simply reinforce that pattern, creating a feedback loop that shuts out talent from newer or less-known universities. The goal for 2025 is to move beyond this. The question is not just "Can AI screen resumes?" but "Can AI help us screen resumes more fairly and insightfully than we can alone?"
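To make the feedback loop concrete, here is a deliberately naive toy in Python. It assumes invented historical records in which past recruiters hired mostly from tier-1 colleges, and a "model" that simply learns each tier's historical hire rate and blends it into the score. It illustrates the failure mode only; it is not a description of how any real screening product works.

```python
from collections import Counter

# Hypothetical historical records: (college_tier, was_hired).
# Past recruiters hired mostly from tier 1; skill was never recorded.
history = (
    [("tier1", True)] * 80 + [("tier1", False)] * 20
    + [("tier3", True)] * 5 + [("tier3", False)] * 95
)


def learn_tier_prior(records):
    """Naive 'model': the historical hire rate for each college tier."""
    seen = Counter(tier for tier, _ in records)
    hired = Counter(tier for tier, was_hired in records if was_hired)
    return {tier: hired[tier] / seen[tier] for tier in seen}


def score(skill, tier, prior, weight=0.5):
    """Blend a job-relevant skill score (0 to 1) with the learned tier prior."""
    return (1 - weight) * skill + weight * prior[tier]


prior = learn_tier_prior(history)          # {'tier1': 0.8, 'tier3': 0.05}
# Two candidates with identical skill but different colleges.
score_tier1 = score(0.9, "tier1", prior)   # about 0.85
score_tier3 = score(0.9, "tier3", prior)   # about 0.475
print(f"tier1: {score_tier1:.2f}, tier3: {score_tier3:.2f}")
```

Both candidates have the same skill score, yet the tier-3 candidate ends up far lower because the model has learned the recruiters' past preference rather than anything about job performance. If its shortlists then become next year's training data, the gap tends to widen.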

Augmenting Critical Thinking, Not Replacing It

A key insight from recent research is the distinction between demonstrated and performed critical thinking. As outlined by Katelyn Xiaoying Mei and Nic Weber (2025), demonstrated critical thinking is what a candidate shows you directly, such as a well-crafted project report or a polished interview answer. Performed critical thinking is the actual, often messy, cognitive process they use to solve problems. In the era of generative AI, candidates can use tools to polish how they demonstrate their skills, so what you see may not reflect the thinking they can actually perform. An effective AI-powered hiring system must be designed to help the hiring manager assess both. Instead of just filtering resumes, the AI should act as a sensemaking partner. The framework proposed by Mei and Weber suggests designing AI interactions that surface the reasoning behind a candidate's work, prompting recruiters to probe deeper. For example, an AI tool could analyse a candidate's coding project and, rather than just scoring it, flag areas where the solution is particularly innovative or where it follows a common template, prompting the recruiter to ask specific questions about the development process during the interview. This shifts the AI's role from a passive filter to an active participant in building a more holistic understanding of the candidate.
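As a rough sketch of the "common template versus distinctive" flag, the Python below compares a submission's tokens with a library of known starter templates using Jaccard similarity and turns the result into an interview question rather than a score. The template library, the tokeniser, and the 0.6 threshold are all hypothetical; a real tool would need far more robust code analysis, and low similarity to known templates is a prompt to probe, not proof of originality.

```python
import re


def tokens(code: str) -> set[str]:
    """Lower-cased identifier and keyword tokens: a crude stand-in for real code analysis."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", code.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tokens two sets have in common (0 = disjoint, 1 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def review_prompt(project_code: str, templates: dict[str, str], threshold: float = 0.6) -> str:
    """Turn template similarity into an interview question instead of a bare score.

    `templates` maps a hypothetical template name to its source text;
    `threshold` is an illustrative cut-off, not a validated value.
    """
    project = tokens(project_code)
    name, best = max(
        ((tpl_name, jaccard(project, tokens(src))) for tpl_name, src in templates.items()),
        key=lambda pair: pair[1],
    )
    if best >= threshold:
        return (f"Closely resembles template '{name}' (similarity {best:.2f}). "
                "Ask which parts the candidate changed and why.")
    return (f"Differs from known templates (closest: '{name}', {best:.2f}). "
            "Ask the candidate to walk through a key design decision.")


# Hypothetical usage with a one-entry template library.
templates = {"todo-starter": "def add_task(tasks, title):\n    tasks.append(title)\n    return tasks"}
print(review_prompt(templates["todo-starter"], templates))
print(review_prompt("class Graph:\n    def dijkstra(self, source):\n        heap = []", templates))
```

Returning a question rather than a number keeps the recruiter in the loop, which is the point of the sensemaking framing.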

A Technical Deep Dive: Debiasing and Sensemaking Frameworks

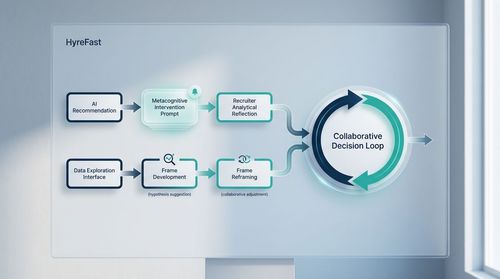

Simply hoping an AI will be fair is not a strategy; fairness must be engineered. This is where recent research becomes directly applicable. Chaeyeon Lim's 2025 paper, "DeBiasMe: De-biasing Human-AI Interactions with Metacognitive AIED (AI in Education) Interventions," offers a practical framework. Although it was developed for AI use in education, its core mechanism transfers well: DeBiasMe uses metacognitive interventions, essentially prompts that encourage the user (here, the recruiter) to reflect on their own decision-making process. In a campus hiring context, this could work as follows:

- The AI screens a resume and provides a recommendation (e.g., "Shortlist").

- Before the recruiter accepts the recommendation, the system intervenes with a prompt like: "This candidate is from a college not frequently in our hiring pool. Based on the resume, what specific skills are contributing to the shortlist recommendation?"

- This prompts the recruiter to engage analytically with the AI's suggestion, surface their own potential biases, and focus on job-relevant criteria.

This approach aligns with another powerful concept: the data-frame theory of sensemaking. In their paper "Supporting Data-Frame Dynamics in AI-assisted Decision Making," Chengbo Zheng et al. (2025) build on the view that effective decision-making is a loop in which we fit data into mental frames (hypotheses) and reshape those frames as new data arrives. Their mixed-initiative framework gives the AI and the human distinct but collaborative roles. An AI hiring system built on this principle would:

- Support Data Exploration: Allow the recruiter to query the candidate pool in flexible ways (e.g., "show me candidates with strong open-source contributions, regardless of GPA").

- Help Frame Development: The AI suggests initial hypotheses or frames. For instance, "Candidate A displays a pattern of rapid skill acquisition based on their sequential projects."

- Facilitate Frame Reframing: If the recruiter rejects the AI's hypothesis, the system helps them articulate a new one, creating a learning loop for both parties.

Together, these roles keep the AI a tool for exploration rather than a black-box gatekeeper.
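The two mechanisms in this section can be sketched together in Python. `explore` stands in for data exploration: the recruiter's current frame is just a predicate over candidates, and reframing means swapping in a new predicate. `reflection_prompt` and `accept_recommendation` mimic a DeBiasMe-style metacognitive intervention by blocking acceptance of a shortlist recommendation for a candidate from a rarely hired college until the recruiter writes down job-relevant evidence. All names, fields, and the 10% rarity cut-off are illustrative assumptions, not part of either paper.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    name: str
    college: str
    gpa: float
    merged_prs: int  # open-source pull requests merged; a hypothetical signal


def explore(pool: list[Candidate], frame: Callable[[Candidate], bool]) -> list[Candidate]:
    """Data exploration: return the candidates that fit the recruiter's current frame."""
    return [c for c in pool if frame(c)]


def reflection_prompt(candidate: Candidate, past_hire_colleges: list[str],
                      rare_share: float = 0.1) -> Optional[str]:
    """Return a metacognitive prompt when the candidate's college is rare among past hires."""
    counts = Counter(past_hire_colleges)
    share = counts[candidate.college] / len(past_hire_colleges) if past_hire_colleges else 0.0
    if share < rare_share:
        return (f"{candidate.name} is from a college not frequently in our hiring pool. "
                "Based on the resume, which specific skills support the shortlist recommendation?")
    return None


def accept_recommendation(candidate: Candidate, past_hire_colleges: list[str],
                          justification: str = "") -> dict:
    """Accept a 'Shortlist' recommendation only once any reflection prompt is answered."""
    prompt = reflection_prompt(candidate, past_hire_colleges)
    if prompt and not justification.strip():
        raise ValueError(prompt)
    return {"candidate": candidate.name, "decision": "shortlist", "justification": justification}


# Hypothetical usage.
pool = [Candidate("Asha", "College A", 6.8, 14), Candidate("Ravi", "College B", 9.1, 0)]
past_hires = ["College B"] * 9 + ["College C"]

# Frame: "strong open-source contributions, regardless of GPA".
print([c.name for c in explore(pool, lambda c: c.merged_prs >= 10)])  # ['Asha']
print(reflection_prompt(pool[0], past_hires))
print(accept_recommendation(pool[0], past_hires, "Merged 14 PRs into a parsing library"))
```

Raising an error on an empty justification is a blunt design choice; a real interface would more likely show the prompt inline, but the principle of requiring an articulated reason before acceptance is the same.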

Building an End-to-End AI-Augmented Hiring Pipeline

So, what does this look like in practice? Here is one possible flow for a modern campus hiring system:

- Intelligent Parsing & Enrichment: AI parses resumes and portfolios (including GitHub links), extracting not just skills but also signals of broader potential, such as evidence of learning agility and the complexity of past projects. It standardises this data into a structured candidate profile.

- Bias-Audited Initial Scoring: A machine learning model scores candidates on job-specific criteria. Critically, this step is paired with continuous auditing of the model's recommendations for fairness across sensitive attributes such as gender, and proxies for pedigree such as college tier. Metacognitive prompts in the style of DeBiasMe can be integrated here to nudge recruiters during quality checks.

- Sensemaking Dashboard: Instead of a simple ranked list, recruiters access a dashboard. Here, they see candidate profiles, the AI's scores, and—most importantly—transparent explanations for each score. For example: "High score on problem-solving: evidenced by implementing an original algorithm in Project X." This is the explainability component in action.

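- Predictive Analytics for Fit and Retention: The system uses predictive analytics to flag candidates whose profiles correlate with long-term success and retention at your company, based on anonymised data from current high-performing employees. Because today's workforce reflects past hiring decisions, these predictions need the same fairness audits as the initial scoring.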

- The Closed Feedback Loop (Integration with LMS): Once hired, the new employee's performance data from the Learning Management System (LMS) and performance reviews is fed back into the hiring model. Over time, this feedback loop refines the model and tunes it to what success looks like in your specific organisation, provided the new data is audited too, since performance reviews can carry their own biases.
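Two pieces of this pipeline lend themselves to a short sketch: explainable scoring and the fairness audit. The Python below assumes the parser has already produced numeric features between 0 and 1, uses a hypothetical linear weighting so that each feature's contribution doubles as its explanation, and audits shortlist rates by college tier with the "four-fifths" heuristic (a group is flagged if its selection rate falls below 80% of the best-off group's rate), a rule of thumb borrowed from US employment-selection guidelines. The group attribute is used only for auditing, never as a scoring input.

```python
from collections import defaultdict

# Hypothetical job-specific weights for features the parser has already extracted (0 to 1).
WEIGHTS = {"problem_solving": 0.5, "project_complexity": 0.3, "communication": 0.2}


def score_with_explanation(features):
    """Return the weighted score and each feature's contribution, largest first."""
    contributions = {k: w * features.get(k, 0.0) for k, w in WEIGHTS.items()}
    explanation = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return sum(contributions.values()), explanation


def selection_rates(candidates, shortlist, group_key):
    """Fraction of each group that made the shortlist."""
    seen, picked = defaultdict(int), defaultdict(int)
    for c in candidates:
        group = c[group_key]
        seen[group] += 1
        if c["id"] in shortlist:
            picked[group] += 1
    return {g: picked[g] / seen[g] for g in seen}


def four_fifths_flags(rates, ratio=0.8):
    """Groups whose selection rate is below `ratio` times the best-off group's rate."""
    best = max(rates.values())
    if best == 0:
        return []
    return sorted(g for g, r in rates.items() if r / best < ratio)


# Hypothetical candidates; college_tier is read only by the audit, never by the scorer.
candidates = [
    {"id": 1, "college_tier": "tier1",
     "features": {"problem_solving": 0.9, "project_complexity": 0.8, "communication": 0.7}},
    {"id": 2, "college_tier": "tier1",
     "features": {"problem_solving": 0.8, "project_complexity": 0.7, "communication": 0.9}},
    {"id": 3, "college_tier": "tier3",
     "features": {"problem_solving": 0.85, "project_complexity": 0.6, "communication": 0.6}},
    {"id": 4, "college_tier": "tier3",
     "features": {"problem_solving": 0.5, "project_complexity": 0.4, "communication": 0.8}},
]

scores = {c["id"]: score_with_explanation(c["features"])[0] for c in candidates}
shortlist = set(sorted(scores, key=scores.get, reverse=True)[:2])
rates = selection_rates(candidates, shortlist, "college_tier")
print(rates)                     # {'tier1': 1.0, 'tier3': 0.0}
print(four_fifths_flags(rates))  # ['tier3']
print(score_with_explanation(candidates[0]["features"])[1][0][0])  # problem_solving
```

In this toy data the tier-3 group is flagged. A flag does not prove unfairness by itself; it tells the recruiter where to look, and that is where an explanation such as "problem_solving contributed most" on the dashboard becomes useful.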

Challenges and Mitigations for the Indian Context

Implementing this is not without challenges. First, in the Indian context, data privacy is paramount, particularly under the Digital Personal Data Protection Act, 2023: collecting and processing candidate data requires robust security and clear communication with candidates about how their data is used. Second, there is a skills gap. Hiring managers and recruiters need training to interpret AI recommendations critically rather than follow them blindly; the goal is augmentation, not automation. Third, there is a major technical challenge: the initial lack of high-quality, unbiased data to train the models. For a new startup, historical data may be sparse or non-existent. A practical mitigation is to start with a rules-based system for initial filtering and gradually introduce machine learning models as your own hiring data, collected and audited for fairness from day one, becomes available. Jugaad solutions rarely scale here; we need robust, ethically grounded systems from the outset.
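A rules-based first pass can be very simple. The Python below is a minimal sketch with hypothetical rules; what matters is that each rule is job-relevant, college name is deliberately not one of them, and every decision is logged with its reasons, so the log can later be audited for fairness and reused as training data.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    graduation_year: int
    skills: set[str]  # assumed lower-cased by the parser
    has_project_link: bool


# Hypothetical job-relevant rules as (reason, check) pairs; college name is deliberately absent.
RULES = [
    ("graduating in 2025", lambda a: a.graduation_year == 2025),
    ("knows Python or Java", lambda a: bool(a.skills & {"python", "java"})),
    ("shares at least one project", lambda a: a.has_project_link),
]


def screen(applicant):
    """Return (passed, names of failed rules) so every rejection has a recorded reason."""
    failed = [reason for reason, check in RULES if not check(applicant)]
    return not failed, failed


def screen_all(applicants, log):
    """Screen a batch, appending one auditable record per applicant to `log`."""
    shortlisted = []
    for a in applicants:
        passed, failed = screen(a)
        log.append({"name": a.name, "passed": passed, "failed_rules": failed})
        if passed:
            shortlisted.append(a)
    return shortlisted


log = []
batch = [Applicant("Meera", 2025, {"python", "sql"}, True),
         Applicant("Karan", 2024, {"c++"}, False)]
print([a.name for a in screen_all(batch, log)])  # ['Meera']
print(log[1]["failed_rules"])
```

Because the rules are explicit, they are easy to review with hiring managers, and the logged outcomes give you the labelled, auditable history that a later machine learning model would need.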

Conclusion: A Balanced Partnership

The most successful campus hiring strategies in 2025 will be those that strike a balance between AI's efficiency and human intuition.

- AI excels at processing vast datasets, identifying patterns, and performing consistent, initial audits for bias. It handles the volume.

- Humans excel at nuanced judgement, assessing cultural fit, and understanding the context behind a candidate's journey. They handle the value.

The research we've examined points toward mixed-initiative systems as the most promising. They don't seek to replace the recruiter but to partner with them, using frameworks like data-frame theory and metacognitive interventions to support more thoughtful, fair, and effective hiring decisions. By focusing on explainability, debiasing, and continuous learning, companies can build a hiring engine that is not only faster but also smarter and more equitable.

Future Directions

The field is evolving rapidly. Based on the current research trajectory, we can expect:

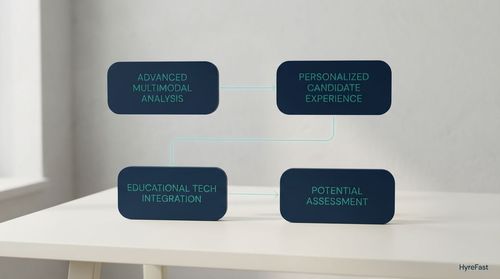

- Advanced Multimodal Analysis: AI that can analyse video interviews for communication skills and cognitive cues, integrating that data with resume and coding-test information.

- Personalised Candidate Experience: AI-driven systems that can answer candidate queries, schedule interviews, and provide personalised feedback, improving the employer brand.

- Deeper Integration with Educational Tech: Direct, privacy-conscious linkages with university LMS to validate skills and projects, creating a more verified talent profile.

- Focus on Potential over Pedigree: Models will get better at identifying latent potential and learning agility, reducing over-reliance on brand-name colleges and standardised scores.

Adopting these AI-driven approaches will be key for Indian companies looking to scale efficiently while building diverse, high-performing teams from the ground up.

References

- Lim, C. (2025). DeBiasMe: De-biasing Human-AI Interactions with Metacognitive AIED (AI in Education) Interventions. [ArXiv]. (Framework for metacognitive debiasing interventions).

- Mei, K. X., & Weber, N. (2025). Designing AI Systems that Augment Human Performed vs. Demonstrated Critical Thinking. [ArXiv]. (Framework for augmenting critical thinking assessment).

- Zheng, C., Miller, T., Bialkowski, A., Soyer, H. P., & Janda, M. (2025). Supporting Data-Frame Dynamics in AI-assisted Decision Making. [Core Literature]. (Mixed-initiative framework based on data-frame theory of sensemaking).