Hiring Without HR: A Founder’s Playbook for Screening Candidates at Scale

Introduction

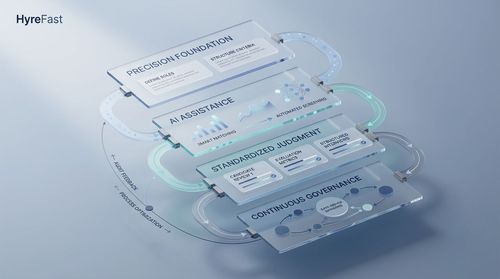

The chatter on social media and tech forums is clear: AI will either revolutionise hiring or bury it in bias. Founders, especially in fast-moving startup ecosystems from Bangalore to San Francisco, feel this tension acutely. When you’re scaling rapidly and an HR department is a luxury you can’t yet afford, the promise of algorithmic hiring is irresistible. The risk, however, is creating a system that is not only inefficient but unfair, potentially alienating the very talent you need to succeed.

This dilemma is more than a scaling problem; it's a foundational challenge for building a healthy company culture from the ground up. As a founder, you are the first gatekeeper, and the processes you set in motion today will define your team's composition for years to come. Relying on gut feeling alone doesn't scale, but blindly trusting black-box algorithms can be disastrous.

The key is to build a systematic, transparent, and fair screening pipeline that acts as a force multiplier for your limited time. This playbook walks you through a four-phase approach to screening candidates at scale, grounded in current research: define your target precisely, leverage technology responsibly, standardise human judgment, and implement the governance necessary to build a great team.

The Foundational Problem: Speed vs. Fairness

The core challenge of hiring without a dedicated HR function is the trade-off between speed on one side and thoroughness and fairness on the other. The pressure to fill roles quickly is immense, but cutting corners in screening can lead to costly mis-hires and homogeneous teams.

Research highlights a critical concern: the "gatekeeper effect," where the design of your hiring process alone can inadvertently cause high-quality applicants from under-represented groups to self-select out. If your system is perceived as opaque or biased, you may never even see the best candidates. Furthermore, studies have shown that the very tools promising efficiency, such as Large Language Models (LLMs) used for resume screening, can perpetuate and even amplify societal biases. For instance, one study of LLM-based resume screening found evidence of bias against Black male candidates.

This isn't just an ethical issue; it's a strategic one. A lack of diversity can stifle innovation and limit your startup's ability to understand its market. The goal, therefore, is not to replace human judgment but to augment it with tools that are faster, more consistent and, with careful design, less biased than unstructured human screening alone.

Phase 1: Define Your Target with Extreme Precision

Before a single line of code is written or a resume is screened, you must crystallise what success looks like for the role. This goes far beyond a standard job description. In the context of algorithmic hiring, ambiguity is the enemy of fairness. A vague criterion like "strong communication skills" is interpreted differently by every reviewer and is nearly impossible for an algorithm to assess consistently. Instead, break the role down into measurable competencies, each scored either as binary (yes/no) or on a defined scale. For a software engineer, this might include:

- Hard Skills: Proficiency in Python (Scale: 1-5), Experience with AWS infrastructure (Binary: Yes/No).

- Project Scope: Has shipped a product feature end-to-end (Binary: Yes/No).

- Contextual Fit: Experience in early-stage startup environments (Binary: Yes/No).

This level of precision does two things. First, it creates a standardised benchmark for evaluation, reducing the noise of individual bias. Second, it provides the clear labelling necessary if you plan to train or fine-tune a model. The output of this phase is a structured scorecard that will guide both automated and human evaluators.
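One way to make the scorecard concrete is to store it as structured data, so that the LLM extraction step and the human review form both read from the same definition. The sketch below is a minimal illustration in Python; the `ScoreType`, `Competency` and `check_scores` names and the competency labels are hypothetical. It only checks that a set of scores is complete and within range, and is not tied to any particular tool.

```python
from dataclasses import dataclass
from enum import Enum


class ScoreType(Enum):
    BINARY = "binary"  # Yes/No
    SCALE = "scale"    # integer rating within [scale_min, scale_max]


@dataclass(frozen=True)
class Competency:
    name: str
    category: str
    score_type: ScoreType
    scale_min: int = 1
    scale_max: int = 5

    def is_valid(self, value) -> bool:
        """Return True if `value` is a legal score for this competency."""
        if self.score_type is ScoreType.BINARY:
            return isinstance(value, bool)
        # bool is a subclass of int, so reject it explicitly for scaled items.
        return (isinstance(value, int) and not isinstance(value, bool)
                and self.scale_min <= value <= self.scale_max)


# Hypothetical encoding of the software engineer scorecard described above.
SOFTWARE_ENGINEER_SCORECARD = [
    Competency("Python proficiency", "Hard Skills", ScoreType.SCALE),
    Competency("AWS infrastructure experience", "Hard Skills", ScoreType.BINARY),
    Competency("Shipped a feature end-to-end", "Project Scope", ScoreType.BINARY),
    Competency("Early-stage startup experience", "Contextual Fit", ScoreType.BINARY),
]


def check_scores(scorecard, scores):
    """Return a list of problems with a {competency name: score} dict.

    An empty list means every competency has a legal score and nothing extra was scored.
    """
    problems = []
    for comp in scorecard:
        if comp.name not in scores:
            problems.append(f"missing score for {comp.name!r}")
        elif not comp.is_valid(scores[comp.name]):
            problems.append(f"invalid score {scores[comp.name]!r} for {comp.name!r}")
    known = {comp.name for comp in scorecard}
    problems.extend(f"unknown competency {name!r}" for name in scores if name not in known)
    return problems


complete = {
    "Python proficiency": 4,
    "AWS infrastructure experience": True,
    "Shipped a feature end-to-end": False,
    "Early-stage startup experience": True,
}
print(check_scores(SOFTWARE_ENGINEER_SCORECARD, complete))  # []
```

Keeping binary and scaled items as distinct types means a reviewer (or a model) cannot quietly record "4" for a yes/no competency, which keeps the labels consistent enough to audit later.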

Phase 2: Leverage LLMs as a Force Multiplier, Not a Final Judge

With a precise scorecard in hand, you can now deploy technology to handle the initial volume. LLMs are well suited to parsing unstructured text (like resumes and cover letters) and extracting structured information. The key is to use them for narrow, well-defined tasks rather than holistic judgments.

Practical application:

- Task: Instead of asking "Is this a good candidate?", prompt the LLM to answer: "From the resume, extract evidence for each competency on the scorecard."

- Example: For the competency "Experience with AWS infrastructure," the LLM's job is to scan the resume for mentions of specific services (e.g., S3, EC2, Lambda) and the context in which they were used. It can then output a confidence score for that evidence, or flag the resume for human review when relevant evidence is found.

This approach mitigates risk. The LLM is not deciding who is a good candidate; it is merely a high-speed information retrieval system that surfaces relevant data points based on your predefined criteria. This is where the hybrid approach is critical: the LLM handles the sifting, allowing your human reviewers to focus on the much more complex task of evaluating the quality and context of that evidence.
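A minimal sketch of this narrow extraction task follows, in Python. It assumes a hypothetical JSON reply format and hypothetical helper names (`build_extraction_prompt`, `parse_extraction`, and a `min_confidence` threshold of 0.5). The actual model call is deliberately omitted because it depends on your provider, so a canned reply stands in for it. The parser also checks that each quoted piece of evidence appears verbatim in the resume, a simple guard against the model inventing evidence.

```python
import json


def build_extraction_prompt(resume_text, competencies):
    """Ask for evidence per competency, never for an overall verdict."""
    competency_lines = "\n".join(f"- {name}" for name in competencies)
    return (
        "Extract evidence from the resume below. Do not judge the candidate.\n"
        "For each competency, copy verbatim any resume sentences that are evidence "
        "for it and give a confidence between 0 and 1 that they are relevant. "
        "Use an empty list when there is no evidence.\n\n"
        f"Competencies:\n{competency_lines}\n\n"
        'Reply with JSON only: {"competencies": '
        '[{"name": str, "evidence": [str], "confidence": float}]}\n\n'
        f"Resume:\n{resume_text}"
    )


def parse_extraction(raw_reply, resume_text, competencies, min_confidence=0.5):
    """Turn the model's JSON reply into one review record per competency.

    Every record is still shown to a human; `needs_attention` highlights records
    whose evidence is missing, low-confidence, or not found verbatim in the resume.
    """
    reply = json.loads(raw_reply)
    by_name = {item.get("name"): item for item in reply.get("competencies", [])}
    records = []
    for name in competencies:
        item = by_name.get(name, {})
        evidence = [str(quote) for quote in item.get("evidence", [])]
        confidence = float(item.get("confidence", 0.0))
        # Guard against invented evidence: each quote must appear in the resume text.
        unverified = [quote for quote in evidence if quote not in resume_text]
        records.append({
            "competency": name,
            "evidence": evidence,
            "confidence": confidence,
            "unverified_quotes": unverified,
            "needs_attention": not evidence or confidence < min_confidence or bool(unverified),
        })
    return records


# Usage with a canned reply standing in for a real model call.
resume = "Built an ingestion pipeline on AWS using S3 and Lambda. Led a Django migration."
canned_reply = json.dumps({"competencies": [{
    "name": "AWS infrastructure experience",
    "evidence": ["Built an ingestion pipeline on AWS using S3 and Lambda."],
    "confidence": 0.9,
}]})
records = parse_extraction(canned_reply, resume,
                           ["AWS infrastructure experience", "Shipped a feature end-to-end"])
```

Here the AWS record arrives with verified evidence, while the "Shipped a feature end-to-end" record has none and is highlighted, so the reviewer knows where to look closely rather than treating silence as a "no".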

Phase 3: Standardise the Human Evaluation

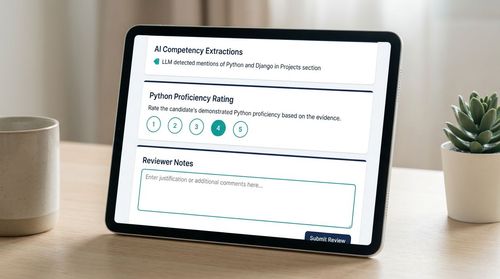

Automation gets you a shortlist; human judgment makes the decision. However, without structure, human evaluation can reintroduce the bias and inconsistency you sought to avoid. A practical takeaway from research is to standardise how humans interact with the AI-generated data.

Create a structured review interface for your team. When a reviewer opens a candidate profile, they should see:

- The AI-generated extractions for each competency (e.g., "LLM detected mentions of Python and Django in Projects section").

- A clear prompt for the human reviewer to make a calibrated judgment (e.g., "Based on the evidence, rate the candidate's Python proficiency from 1-5.").

- A required field for the reviewer to justify their rating with specific notes.

This process forces a dialogue between the AI's output and the reviewer's expertise. It also creates auditable data: if you notice a reviewer consistently rating candidates higher or lower than their peers, you have a clear signal for calibration and training. This standardisation is a form of active governance that ensures the "human in the loop" is an asset, not a bottleneck or a source of error.
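The calibration signal described above can be computed directly from the review data. The following Python sketch, using hypothetical function names, reviewer names and a hypothetical threshold of 1.5 points, measures how far each reviewer's ratings sit above or below their peers' ratings on the same candidate and competency.

```python
from collections import defaultdict
from statistics import mean


def reviewer_offsets(ratings):
    """Average amount each reviewer scores above (+) or below (-) their peers.

    `ratings` is an iterable of (reviewer, candidate, competency, score) tuples.
    Only items scored by two or more reviewers count, and each reviewer is
    compared with the mean of the *other* reviewers on that same item.
    """
    by_item = defaultdict(dict)
    for reviewer, candidate, competency, score in ratings:
        by_item[(candidate, competency)][reviewer] = score

    deviations = defaultdict(list)
    for scores in by_item.values():
        if len(scores) < 2:
            continue  # no peer to compare against
        for reviewer, score in scores.items():
            peer_scores = [s for r, s in scores.items() if r != reviewer]
            deviations[reviewer].append(score - mean(peer_scores))
    return {reviewer: mean(devs) for reviewer, devs in deviations.items()}


def needs_calibration(offsets, threshold=1.5):
    """Reviewers whose average offset is at least `threshold` points either way."""
    return sorted(r for r, offset in offsets.items() if abs(offset) >= threshold)


ratings = [
    ("ana", "cand-1", "Python proficiency", 4),
    ("ben", "cand-1", "Python proficiency", 4),
    ("raj", "cand-1", "Python proficiency", 2),
    ("ana", "cand-2", "Python proficiency", 3),
    ("ben", "cand-2", "Python proficiency", 3),
    ("raj", "cand-2", "Python proficiency", 1),
]
offsets = reviewer_offsets(ratings)
print(needs_calibration(offsets))  # ['raj']
```

With only three reviewers, a single outlier also pushes the other reviewers' offsets up (here to +1), which is why the illustrative threshold sits above 1; a larger reviewer pool dampens this effect.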

Phase 4: Implement Continuous Governance and Auditing

Adopting algorithmic technology is not a one-time event; it requires continuous governance. Research warns that without active auditing, these systems can drift and produce biased outcomes over time. As a founder, you must build auditing into your weekly or monthly rhythm.

Key auditing questions:

- Demographic Fairness: Are candidates from certain backgrounds being systematically screened out at a higher rate? You can audit this by analysing pass rates across different groups at each stage of your pipeline. Research suggests that corrective measures, including affirmative action, can be effective in addressing such biases. At startup scale, a practical first step is to manually review a sample of rejected applications from under-represented groups.

- Predictive Validity: Are the candidates who score well in your screening process actually performing well on the job? Correlating screening scores with later performance data (e.g., from performance reviews) is the ultimate test of your system's effectiveness.

- Model Drift: Is the LLM's performance changing as the resumes it sees change, as the underlying model is updated, or as your job requirements evolve? Regularly testing the model on a fixed set of benchmark resumes can help detect this.

This phase turns your hiring pipeline from a static tool into a learning system. The data you collect from both the AI and the human reviewers becomes your most valuable asset for refining your process and ensuring it remains fair and effective.
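Each of the three audit questions above can be reduced to a simple metric. The Python sketch below assumes you can export pass/fail outcomes by group for a pipeline stage, screening scores paired with later performance ratings, and a fixed set of reference answers for your benchmark resumes; all function names and group labels are hypothetical. The impact-ratio check borrows the US "four-fifths" rule of thumb from the Uniform Guidelines on Employee Selection Procedures as an illustrative trigger for closer review.

```python
from statistics import mean


def selection_rates(outcomes):
    """Pass rate per group at one pipeline stage.

    `outcomes` is an iterable of (group, passed) pairs, with `passed` a bool.
    """
    totals, passes = {}, {}
    for group, passed in outcomes:
        totals[group] = totals.get(group, 0) + 1
        passes[group] = passes.get(group, 0) + int(passed)
    return {group: passes[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Each group's pass rate divided by the highest group's pass rate.

    Under the four-fifths rule of thumb, a ratio below 0.8 is a signal to
    investigate, not proof of bias; small groups make these ratios noisy.
    """
    best = max(rates.values())
    return {group: (rate / best if best else 0.0) for group, rate in rates.items()}


def pearson(xs, ys):
    """Pearson correlation between screening scores and later performance ratings."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two entries")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (sx * sy)


def benchmark_agreement(reference, current):
    """Share of benchmark items where today's model output matches the reference.

    Both arguments map a (resume_id, competency) key to the extracted answer.
    """
    if not reference:
        raise ValueError("benchmark is empty")
    matches = sum(current.get(key) == answer for key, answer in reference.items())
    return matches / len(reference)


stage = ([("group_a", True)] * 5 + [("group_a", False)] * 5
         + [("group_b", True)] * 3 + [("group_b", False)] * 7)
rates = selection_rates(stage)
print(impact_ratios(rates))  # {'group_a': 1.0, 'group_b': 0.6}
```

In this example group_b's ratio of 0.6 falls below 0.8, which would be the cue to pull a sample of its rejected applications for manual review. Predictive validity can only be measured for candidates you actually hired, so treat the correlation as a rough, directional signal, especially with small samples.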

Navigating the Inherent Trade-offs

This approach requires acknowledging and managing several trade-offs:

- Speed vs. Thoroughness: A highly granular scorecard and review process takes more time to set up but saves time and improves quality in the long run. Optimise for thoroughness when hiring for critical, hard-to-fill roles.

- Cost vs. Accuracy: Building a customised screening pipeline has an upfront time cost. Using off-the-shelf tools is cheaper but may be less aligned with your specific needs and more opaque. For early-stage startups, start with a heavily guided off-the-shelf tool and gradually customise as you scale.

- Automation vs. Human Touch: Over-automation can lead to a cold candidate experience. Ensure there are clear points of human contact, especially for candidates who reach the final stages. The goal is to use automation to free up human time for meaningful interaction.

Conclusion: Building for Scale and Fairness

Building a hiring process without HR is a significant technical and ethical undertaking. The journey from a flood of applications to a curated shortlist of excellent candidates is not about finding a magic algorithm. It is about building a robust, transparent system.

- Precision is the foundation. Vague job descriptions produce noisy, biased outcomes. Your scorecard is your first and most important line of defence.

- AI is an assistant, not an arbiter. Use LLMs for what they do well (high-speed information retrieval), not for making final decisions.

- Standardise human judgment. A structured review process is essential to mitigate human bias and create consistent, auditable outcomes.

- Governance is continuous. Regularly audit your pipeline for fairness and accuracy. Your hiring system is a product that requires ongoing iteration and improvement.

By embracing this hybrid, governance-focused approach, founders can create a scalable hiring machine that is not only efficient but also fair and transparent, laying the groundwork for a diverse and innovative team.

Future Directions

The field of algorithmic hiring is evolving rapidly. Founders should keep an eye on several emerging trends:

- Explainable AI (XAI) for Hiring: Future tools may provide clearer explanations for why a candidate was flagged for a particular skill, moving beyond black-box scoring to actionable insights.

- Integration with Internal Mobility: The same principles used for external hiring can be applied internally to identify talent for upskilling and internal role changes, creating a more dynamic workforce.

- Bias Mitigation as a Standard Feature: We can expect a new generation of tools that have bias detection and mitigation capabilities built-in, rather than added as an afterthought.

- Regulatory Landscape: As governments worldwide take greater interest in algorithmic fairness, compliance and transparency will become even more critical components of the hiring tech stack.

References

- Study on LLM bias in resume screening against Black males. [Link to source]

- Research on the "gatekeeper effect" in hiring algorithms. [Link to source]

- Multidisciplinary survey on algorithmic hiring and fairness. [Link to source]

- Research on affirmative action measures in addressing hiring biases. [Link to source]

- Industry perspectives on the adoption and governance of hiring technology. [Link to source]